The viral YouTube Shorts clip of a Somali defendant asking to be deported rather than serve a 30-year sentence is not authentic. An AI-detection tool flagged the video as 99.4% likely to be AI-generated, and visual anomalies—such as a motionless person who appears to be the defense attorney—support that assessment. The uploader's channel contains similar fabricated clips, and a Google Lens reverse-image search found no matches or news coverage. Verdict: synthetic deepfake, not real courtroom footage.

Fact Check: Viral Video Of Somali Defendant Asking To Be Deported Is An AI-Generated Deepfake

A widely shared YouTube Shorts clip that purports to show a Somali defendant asking to be deported rather than serve a 30-year sentence for daycare fraud is not authentic. Multiple lines of evidence indicate the clip was synthetically generated and should not be treated as real courtroom footage.

What The Clip Claims

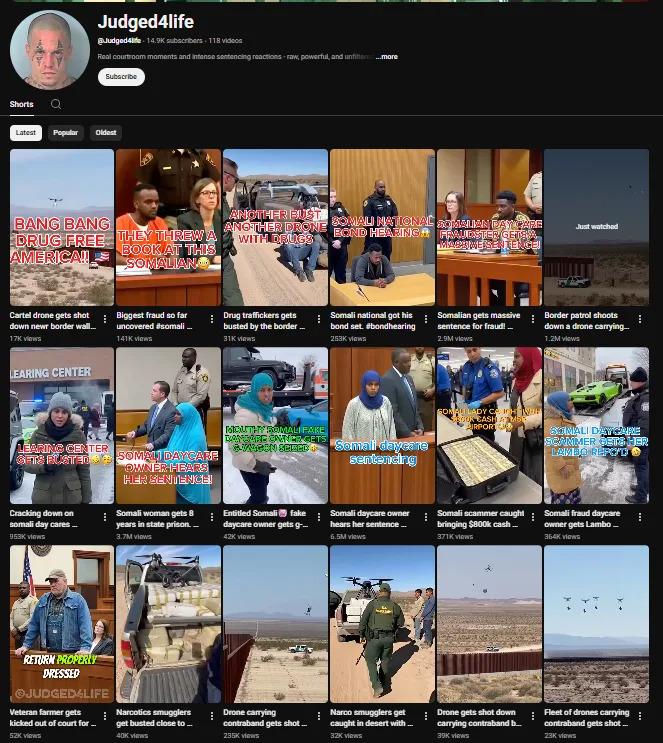

The short video was uploaded to the @Judged4life YouTube channel on January 21, 2026, under the title "Somalian gets massive sentence for fraud! #sentence #prison" and had about 2.5 million views at the time of reporting. The posted transcript reads:

Judge: For committing fraud on a massive scale and using daycares as your cover up, this court sentences you to 30 years in state prison.

Defendant: Can you just deport me? Please?

Judge: Absolutely not. You'll do your full sentence here and will be deported afterwards.

Why The Video Is Not Real

Several clear indicators point to fabrication:

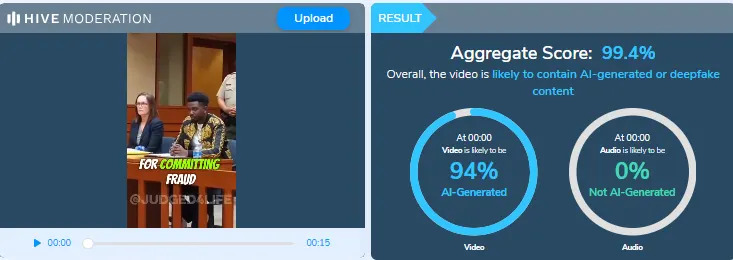

- High AI-detection score: Lead Stories submitted the clip to the Hive Moderation AI-content detector, which returned a 99.4% likelihood that the video was AI-generated.

- Visual anomalies: A woman who appears to be the defendant's lawyer remains unnaturally still and never turns or reacts when the defendant pleads—behavior inconsistent with authentic courtroom footage.

- Uploader pattern: The @Judged4life channel contains numerous similar clips and offers no disclaimer that its material is synthetic, suggesting a pattern of fabricated content.

- No independent corroboration: A Google Lens reverse-image search found no matches for any frames, and no reputable news outlets published the footage—something that would be expected if the scene were genuine and newsworthy.

Conclusion

Given the strong AI-detection result, visible inconsistencies in the footage, the uploader's history, and the absence of independent verification, the video should be considered a synthetic deepfake and not authentic court footage.

Tips To Spot Similar Deepfakes

- Examine the uploader's channel for a pattern of sensational or synthetic content and check for creator disclaimers.

- Look for visual cues: frozen or oddly still faces, poor lip-sync, mismatched lighting, or repeating pixels and artifacts.

- Search credible news sites and use reverse-image tools; lack of coverage for an extraordinary clip is a red flag.

- Use AI-detection tools as one input among several—no single tool is definitive.

- When in doubt, do not share; report suspicious content and seek verification from reliable sources.

Verdict: Fake — AI-Generated Deepfake.
