A UK study of 664 volunteers found AI-generated faces are often hard for people to detect. Untrained super-recognizers identified AI faces 41% of the time and typical participants 31%, both below chance. After a five-minute training focused on visual artifacts, super-recognizers’ accuracy rose to 64% while typical participants reached about 51%. The findings suggest brief, targeted training combined with expert human ability could help verify identities and counter fraud.

AI-Generated Faces Fool Most People — 5 Minutes of Training Helps Super-Recognizers Spot Fakes

AI image generators have advanced rapidly and can now produce portraits that often look more convincing than real photographs. A new study from the UK shows that brief, focused training can help certain people — notably super-recognizers — become substantially better at spotting synthetic faces.

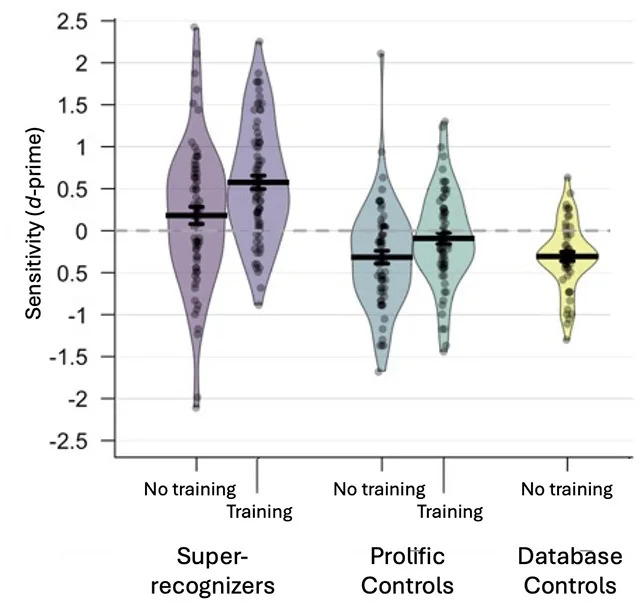

Researchers tested 664 volunteers split into two groups: super-recognizers (individuals who consistently demonstrate exceptional face-recognition ability in prior studies) and people with typical face-recognition skills. Participants completed one of two tasks: either judging a single face as real or AI-generated, or comparing a real face and an AI face side-by-side and identifying the fake. Different volunteers took part in each task, and each experiment was run with and without a short training session.

Key Findings

Both groups struggled to detect AI-generated faces, highlighting how realistic modern AI portraits can appear. Among untrained participants, super-recognizers correctly identified AI faces only 41% of the time, while typical participants managed just 31%. Because half the images were AI-generated, random guessing would yield about 50% accuracy, so both untrained groups scored below chance on average.

However, a brief five-minute training session had a marked effect for super-recognizers. After training, their accuracy rose to 64%, well above chance. Typical participants also improved, but only to about 51%, which is roughly chance level.
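To make the comparison with chance concrete, here is a minimal arithmetic sketch in Python. It uses only the four percentages reported above and assumes they are directly comparable, meaning the same task and scoring for each group, which the article does not spell out. The names `CHANCE`, `reported`, and `summarize` are illustrative and do not come from the study.

```python
# Reported accuracy on AI faces, in percent, as quoted in the article.
CHANCE = 50.0  # half the images were AI-generated, so guessing gives about 50%

reported = {
    "super-recognizers": {"untrained": 41.0, "trained": 64.0},
    "typical participants": {"untrained": 31.0, "trained": 51.0},
}


def summarize(scores):
    """Return the training gain and the distance from chance, in percentage points."""
    return {
        "gain_points": scores["trained"] - scores["untrained"],
        "untrained_vs_chance": scores["untrained"] - CHANCE,
        "trained_vs_chance": scores["trained"] - CHANCE,
    }


summary = {group: summarize(scores) for group, scores in reported.items()}

for group, stats in summary.items():
    print(
        f"{group}: gain {stats['gain_points']:+.0f} points, "
        f"untrained {stats['untrained_vs_chance']:+.0f} vs chance, "
        f"trained {stats['trained_vs_chance']:+.0f} vs chance"
    )
```

On these figures, super-recognizers gain 23 points and typical participants 20, but only super-recognizers finish clearly above the 50% guessing baseline (14 points above, versus 1 point for typical participants).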

"AI images are increasingly easy to make and difficult to detect," said Eilidh Noyes, a psychology researcher at the University of Leeds. "They can be used for nefarious purposes, therefore it is crucial from a security standpoint that we are testing methods to detect artificial images."

What the Training Taught

The short training taught participants to look for visual artifacts common in AI-generated faces, such as missing or distorted teeth, unnatural symmetry, and odd blurring or irregular edges around hair and skin. These cues are by-products of how many image generators are trained and refined.

Many face generators use generative adversarial networks (GANs), in which two algorithms, a generator and a discriminator, compete and iterate until the generator produces highly realistic images. That realism is precisely what makes detection hard for untrained observers.
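The generator-versus-discriminator loop can be illustrated with a toy sketch in plain Python. This is not the kind of model that produces faces, and it is not taken from the study. It assumes a one-dimensional setting in which "real" data are numbers drawn around a hypothetical mean of 4, the generator is a simple linear function of random noise, and the discriminator is a logistic classifier. All names and parameter values (`train_toy_gan`, `real_mean`, `lr`, and so on) are made up for illustration.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, batch=32, lr=0.01, real_mean=4.0, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * z + b
    w, c = 0.1, 0.0  # discriminator: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (
            sum((d - 1.0) * x for d, x in zip(d_real, real))
            + sum(d * x for d, x in zip(d_fake, fake))
        ) / batch
        grad_c = (sum(d - 1.0 for d in d_real) + sum(d_fake)) / batch
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: adjust a and b so the updated discriminator
        # rates the fakes as more "real" (non-saturating generator loss).
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_a = sum((d - 1.0) * w * z for d, z in zip(d_fake, noise)) / batch
        grad_b = sum((d - 1.0) * w for d in d_fake) / batch
        a -= lr * grad_a
        b -= lr * grad_b

    return {"a": a, "b": b, "w": w, "c": c}


params = train_toy_gan()
print(params)
```

Each round, the discriminator gets slightly better at separating real from fake numbers, and the generator then shifts its output toward whatever the discriminator currently accepts as real. Image generators of this type follow the same alternating pattern with deep neural networks in place of these two-parameter models.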

"Our training procedure is brief and easy to implement," said Katie Gray, a psychology researcher at the University of Reading. "Combining this training with the natural abilities of super-recognizers could help tackle real-world problems, such as verifying identities online."

Why It Matters

AI portraits can be created quickly by nonexperts and are already used in contexts that range from harmless entertainment to harmful fraud — fake dating profiles, social-media impersonations, and identity-theft scams. The study, published in Royal Society Open Science, suggests that targeted, short training combined with specialist human ability could become part of practical defenses against AI misuse.

While training did not make typical participants reliably accurate, the substantial improvement among super-recognizers points to a potential operational approach: deploy trained specialists for high-stakes verification tasks and continue improving automated detection tools.
