The Eedi randomized trial of 165 British students (ages 13–15) found that an AI tutor, supervised by expert human reviewers and given detailed, non-identifying student data, outperformed human-only text tutoring. Students using the supervised AI solved new problems correctly 66.2% of the time, versus 60.7% with human tutors, and corrected misconceptions more than 90% of the time, compared with about 65% for static replies. The AI produced only five factual errors in 3,617 messages, and tutors approved roughly 75% of AI drafts with minimal or no edits. Researchers say pedagogically tuned AI plus human oversight could scale personalized tutoring while humans handle complex cases.

Supervised AI Tutors Outperform Human-Only Tutoring in Randomized Trial

An AI-powered tutor, guided by a small team of human experts and fed rich, individualized student data, outperformed human-only tutoring in a randomized trial of British secondary-school pupils, a new study from education-technology company Eedi reports.

The Study

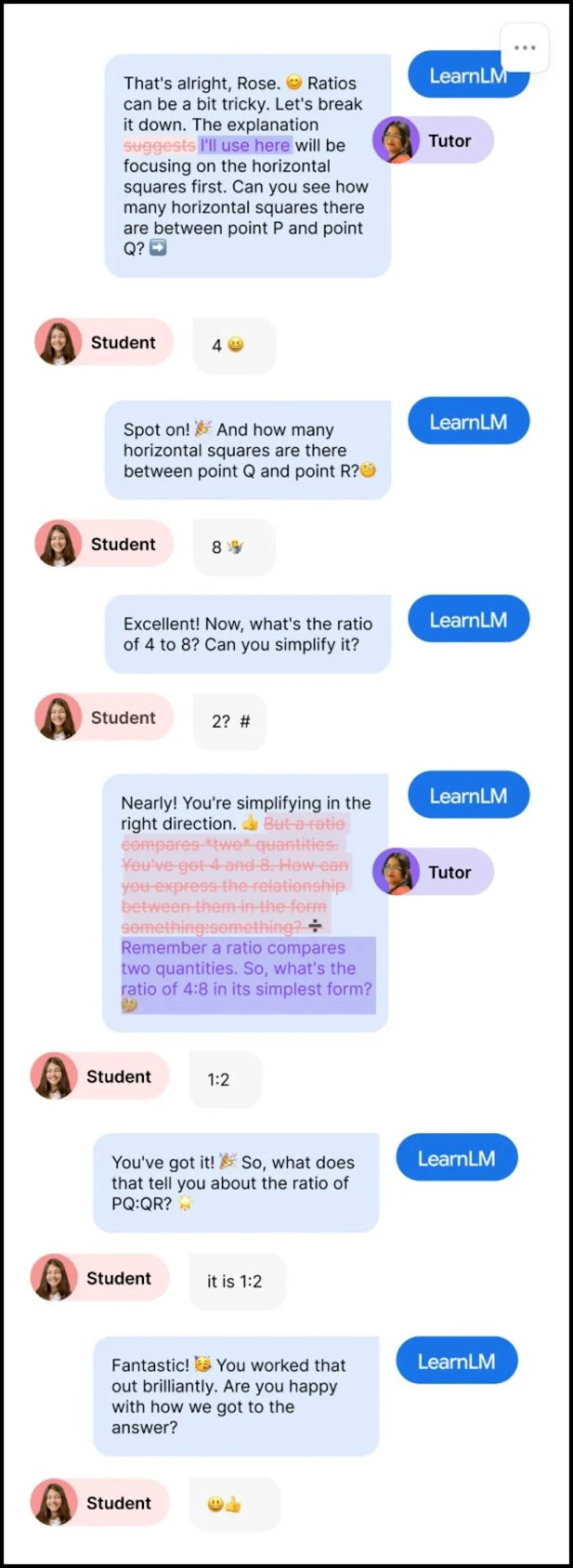

The trial involved 165 students in the U.K., ages 13–15. Eedi paired Google’s LearnLM large language model with a handful of expert tutors. As students worked on math problems through Eedi’s platform, the model drafted reply messages when help was requested. Human tutors reviewed and edited each draft, approving only those messages they would feel comfortable sending themselves. Students did not know whether their conversational partner was AI-driven or human.

Key Results

On follow-up tasks covering new topics, students who used the supervised AI tutor solved problems successfully 66.2% of the time, versus 60.7% for students who chatted by text with human tutors alone, a gap of 5.5 percentage points. When students received the combined AI-and-human support, they corrected misconceptions and supplied correct answers more than 90% of the time, compared with about 65% for static, prewritten responses.

The AI produced only five factual errors, so-called 'hallucinations,' in 3,617 messages (about 0.14%). Tutors accepted roughly three out of four AI-drafted messages with minimal or no edits, and none of the AI outputs raised safety concerns for tutors.

Why the System Worked

Researchers credit the strong results to feeding the model detailed, non-identifiable context about each student’s prior 20 weeks of instruction: topics covered, mastered concepts, typical misconceptions, upcoming curriculum topics, and engagement signals (for example, whether the student watched instructional videos or put effort into questions). That context let the model tailor its responses, deciding when to push the learner and when to give lighter support, in ways a generic, out-of-the-box LLM could not.

Generic models lack that context, said Bibi Groot, Eedi’s chief impact officer: 'They don’t know what misconceptions or topics students are struggling with and what they’ve already mastered, so they can’t dynamically change how they address the topic like a tailored tutor can.'

Practical Benefits and Limits

Human tutors bring important relational and pedagogical skills but are limited by time and cognitive load. Eedi employs about 25 tutors across time zones to cover students from 9 a.m. to 10 p.m., but truly round-the-clock human tutoring would be costly. The AI can process comprehensive context instantly and draft tailored replies, with humans intervening when a conversation derails or a misconception persists.

Context and Expert Reactions

The study, posted Nov. 25 on Eedi’s website and slated for peer review, differs from some recent research: earlier work from Stanford examined AI-assisted human tutoring (humans led sessions with AI support), whereas Eedi placed AI in the driver’s seat with human oversight and approval.

Outside experts welcomed the findings while noting caveats. Robin Lake of the Center on Reinventing Public Education said the results add to evidence that, under controlled conditions, AI can scale tutoring effectively. Liz Cohen of 50CAN praised the study but cautioned that chat-based models may be less suitable for younger children and that student persistence remains a concern: disengaged or frustrated learners may be less likely to persist with AI-driven lessons.

Implications

The trial suggests 'pedagogically fine-tuned' AI, coupled with human oversight and rich non-identifying student data, could expand access to effective, individualized tutoring. Schools might adopt AI as a frontline tutor while reserving human intervention for complex cases—balancing scalability with safety and pedagogy.
