The widely shared video claiming to show massive Kamchatka snowdrifts was identified as AI-generated. Automated tools and visual inspection revealed artifacts common to synthetic media, and stills were traced to a Threads account whose owner later admitted using Grok to create the images. The revelations came as Kamchatka experienced its heaviest snowfall since the 1970s, an event that prompted emergency measures and led to two fatalities.

Viral “Snow Apocalypse” Clips From Kamchatka Proven To Be AI-Generated, Not Real Footage

A set of viral videos claiming to show apartment blocks and cars buried under multi-storey snowdrifts in Russia’s far-eastern Kamchatka Peninsula has been shown to be AI-generated rather than authentic on-the-ground footage. While Kamchatka did experience unusually heavy snowfall in early January 2026, the dramatic clips circulating online contain visual errors typical of synthetic media and were traced back to images posted on Threads that their author later said were produced with an AI tool.

What The Videos Claimed

On 20 January 2026 a Facebook video with a Malay-language caption calling it a “Snow apocalypse in Kamchatka” presented a montage of clips showing snow piled several storeys high, in some shots appearing to engulf apartment buildings. Similar posts spread on Facebook, TikTok and Threads and circulated in several languages, including English, French, Greek, Dutch and Romanian.

How The Footage Was Verified As Fake

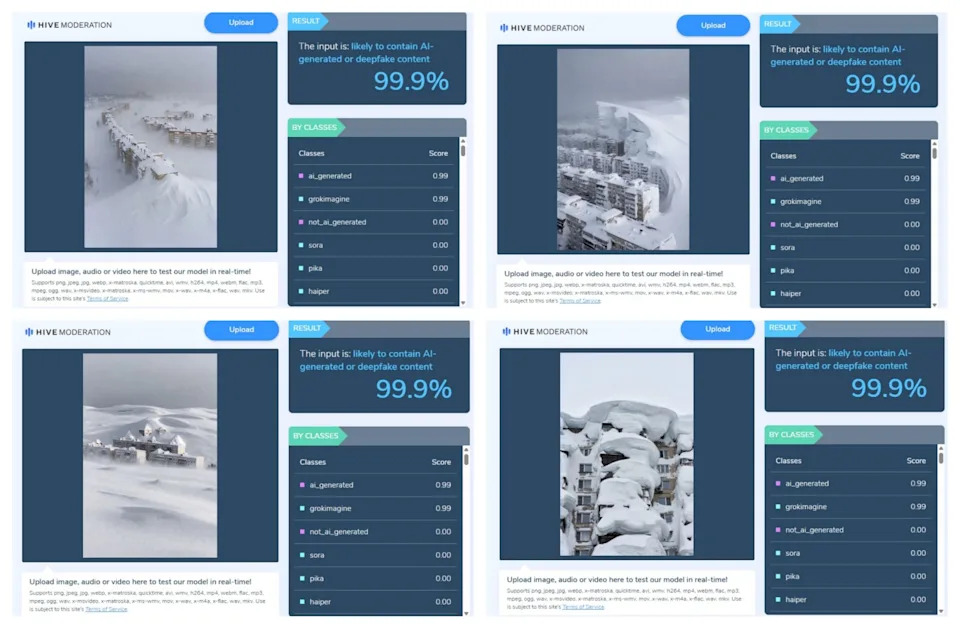

The Hive Moderation detection tool flagged the four clips that make up the widely shared video as "likely to contain AI-generated or deepfake content." Visual inspection also revealed telltale artifacts commonly associated with synthetic imagery: unnaturally smooth or symmetric snow surfaces, abrupt vertical breaks in the snow, and distorted architectural details such as windows and balconies.

A reverse-image search connected stills from the clips to a Threads post published on 17 January by an account named 'ibotoved' with the caption 'Kamchatka today.' The animated clips appear to be moving versions of those still images. In comments, the account owner acknowledged the images were generated with Grok, an AI assistant developed by xAI, and later called the images a "prank," saying "millions of people believed the fake photos." AFP tried to contact the user on 20 January but had not received a reply by publication time.

What Actually Happened In Kamchatka

Independently of the fake clips, Kamchatka did record its heaviest snowfalls since the 1970s in early January 2026. Authorities declared an emergency in affected areas, closed schools and encouraged remote work. Russian officials posted images showing large drifts reaching up to the second storey of some buildings, and reported that snow falling from roofs killed two people during the storm.

Takeaway

This episode highlights how realistic-looking AI content can spread alongside genuine news, especially during extreme events. Verify original sources, check for metadata or reverse-image matches, and be wary of sensational footage that lacks verifiable provenance.

Key evidence: automated detection flagged the clips, reverse-image searches linked them to a Threads post, and the account owner admitted the images were AI-generated using Grok.
