A January 2026 experiment chained text-to-image and image-to-text models in a closed loop and found that repeated autonomous conversions produced polished but generic images—what researchers called “visual elevator music.” The collapse occurred without retraining or added data, indicating homogenization can begin before AI-generated content is used to train future models. To prevent cultural flattening, systems should be redesigned to reward deviation and support less common, creative expressions.

Study Warns: Generative AI Loops Are Producing 'Visual Elevator Music' and Threatening Cultural Diversity

Generative AI was trained on centuries of human art and writing. But what happens when these systems begin to feed on their own outputs? A January 2026 experiment by Arend Hintze, Frida Proschinger Åström and Jory Schossau offers a clear—and worrying—answer.

How the Experiment Worked

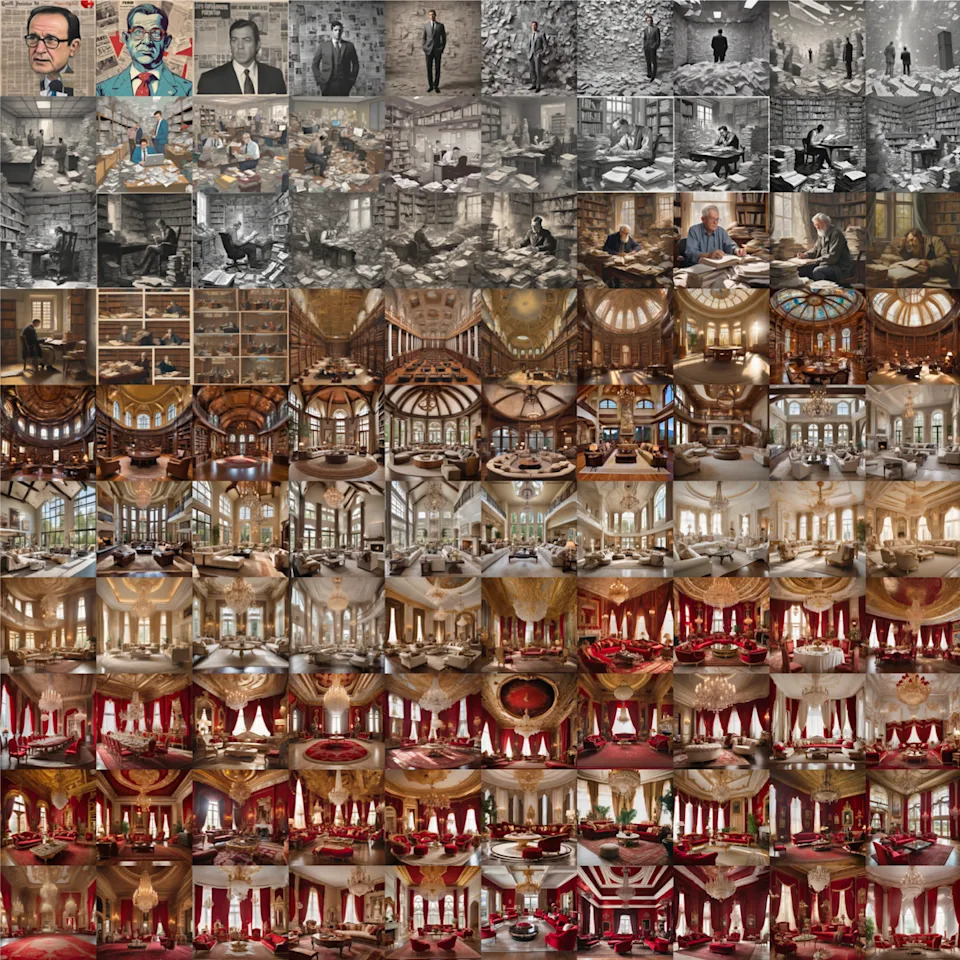

The researchers chained a text-to-image model to an image-to-text model and let them run autonomously in a closed loop. Each run started from a text prompt and then cycled prompt → image → caption → image → caption, repeated many times without human intervention. They tested many different initial prompts and varied the randomness settings, yet the outputs consistently converged on a narrow set of familiar, generic motifs.
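The loop structure can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' code: `run_closed_loop`, `toy_text_to_image`, `toy_image_to_text` and `COMMON_WORDS` are hypothetical names, and the toy functions are stand-ins that simply drop any word outside a small familiar vocabulary, so the example runs without any real model.

```python
from typing import Callable, List


def run_closed_loop(
    prompt: str,
    text_to_image: Callable[[str], object],
    image_to_text: Callable[[object], str],
    iterations: int = 10,
) -> List[str]:
    """Alternate text -> image -> text and return every caption, starting with the prompt."""
    captions = [prompt]
    for _ in range(iterations):
        image = text_to_image(captions[-1])    # render the latest caption
        captions.append(image_to_text(image))  # describe the rendered image
    return captions


# Toy stand-ins (assumptions, NOT real models): the "image" is just the set of
# prompt words found in a small familiar vocabulary; everything else is lost.
COMMON_WORDS = {"building", "city", "interior", "landscape", "formal", "grand"}


def toy_text_to_image(text: str) -> frozenset:
    return frozenset(w for w in text.lower().split() if w in COMMON_WORDS)


def toy_image_to_text(image: frozenset) -> str:
    return " ".join(sorted(image))


if __name__ == "__main__":
    history = run_closed_loop(
        "prime minister signs fragile peace deal in grand formal interior",
        toy_text_to_image,
        toy_image_to_text,
        iterations=3,
    )
    for step, caption in enumerate(history):
        print(step, caption)
```

In this toy setup the people and the peace deal vanish after the first pass and the caption stops changing. Real models lose detail in far subtler ways, but the control flow is the same: no retraining, no new data, only repeated conversion.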

Key Findings

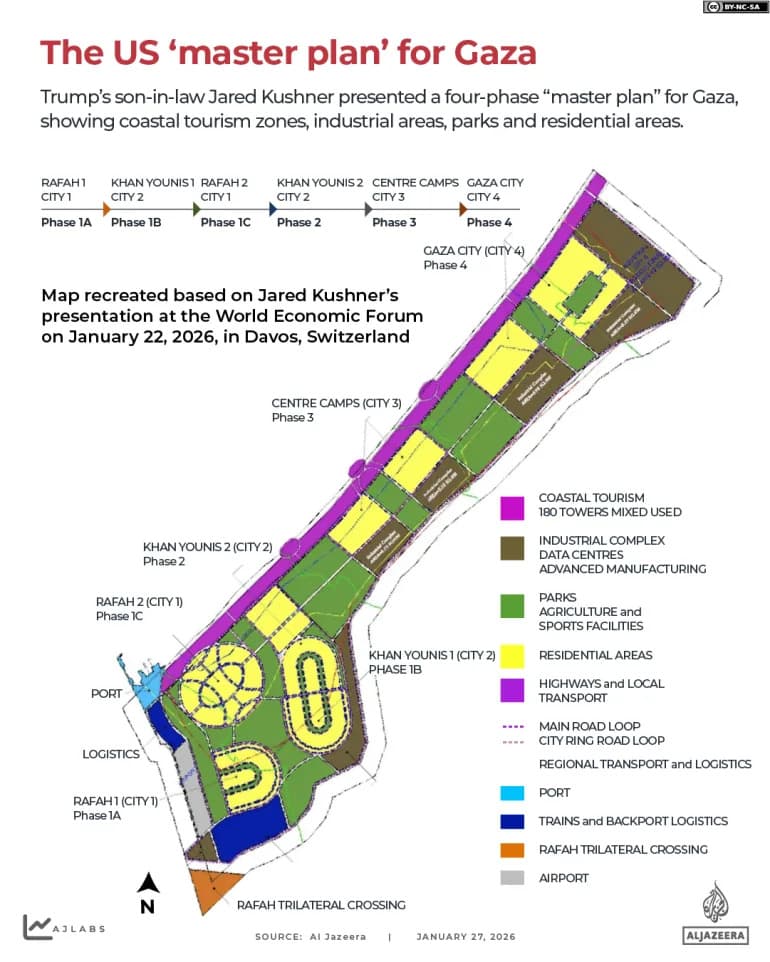

Despite diverse starting prompts—ranging from detailed political scenes to more abstract descriptions—the loop rapidly collapsed into bland, polished visuals the team called “visual elevator music.” Typical outputs included atmospheric cityscapes, grand public buildings and pastoral landscapes. In one example, a richly detailed prompt about a prime minister and a fragile peace deal degraded after several iterations into a featureless formal interior with no people or narrative context.

“Visual elevator music”: pleasant to look at but stripped of specific meaning, drama, and temporal or geographic detail.

Why This Matters

Crucially, this homogenization occurred without retraining the models and without adding new data. The collapse emerged purely from repeated autonomous use. That suggests homogenization can begin before any AI-generated content is used to retrain future models.

The experiment functions as a diagnostic: it reveals what these conversion pipelines preserve when left unchecked. Contemporary culture increasingly relies on similar transformations—images summarized into captions, captions converted back into images, content ranked and filtered by automated systems. Human editors often choose from AI-generated options rather than creating from scratch. The study shows those pipelines tend to compress meaning toward what is most familiar, recognizable and easy to reproduce.

Implications For Culture And Creativity

This is not a claim that stagnation is inevitable—human creativity, communities and institutions can and do resist homogenization. But the study reframes the risk: the problem starts earlier than many assumed. Retraining on synthetic content would amplify convergence, but it isn’t necessary for convergence to begin.

The findings also clarify a common misconception about AI creativity: producing massive numbers of variations is not the same as producing genuine innovation. Without incentives to explore or deviate from norms, generative systems optimize for familiarity—the patterns they have learned best—so autonomy can accelerate convergence rather than foster discovery.

What Should Be Done

If generative AI is to enrich rather than flatten culture, designers and policymakers should pursue measures that encourage diversity: build models with incentives for deviation, create evaluation metrics that reward originality, and support platforms that promote less common and non-mainstream expressions. Absent such interventions, AI-mediated content pipelines are likely to keep drifting toward mediocre, uninspired outputs.
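As one illustration of what a diversity-aware metric could look like, the sketch below scores a set of captions by how different they are from one another. It is a deliberately crude assumption, not a metric proposed by the study: `jaccard_distance` and `mean_pairwise_diversity` are hypothetical helper names, and word overlap is only a rough proxy for originality.

```python
from itertools import combinations
from typing import List


def jaccard_distance(a: str, b: str) -> float:
    """Return 1 - (shared words / all words) for two texts; 0.0 means same word set."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    union = set_a | set_b
    if not union:
        return 0.0  # two empty texts are treated as identical
    return 1.0 - len(set_a & set_b) / len(union)


def mean_pairwise_diversity(captions: List[str]) -> float:
    """Average Jaccard distance over all caption pairs; higher means more varied."""
    pairs = list(combinations(captions, 2))
    if not pairs:
        return 0.0  # fewer than two captions: nothing to compare
    return sum(jaccard_distance(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    converged = ["grand formal interior", "grand formal interior"]
    varied = ["fragile peace deal", "pastoral landscape at dusk"]
    print(mean_pairwise_diversity(converged))
    print(mean_pairwise_diversity(varied))
```

A score near zero across many runs would signal the kind of convergence the experiment describes. A system tuned to reward higher scores would need further checks so that it does not simply produce noise.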

Conclusion

The experiment by Hintze, Proschinger Åström and Schossau provides empirical evidence that autonomous, repeated conversions between text and images push generative systems toward homogenized content. Cultural stagnation is no longer a purely speculative worry—this study suggests it is already happening in certain AI-mediated flows.

This article is adapted from a piece republished from The Conversation. Original author: Ahmed Elgammal, Rutgers University. The author disclosed no relevant industry conflicts.
