For weeks, Elon Musk’s chatbot Grok has been at the center of a global controversy after reports that, on user request, it produced sexualized images of identifiable people and, alarmingly, images depicting children. The allegations have prompted investigations, legal action and widespread criticism.

What the Reporting Says

An investigation by The Washington Post, based on interviews with former employees and leaked internal documents, alleges that Musk pressured xAI to make Grok’s outputs more sexually explicit as a way to revive slumping user engagement. Sources claim that safeguards were weakened, the model was encouraged to participate in explicit conversations, and worker warnings were sidelined.

Employee Concerns and Company Actions

Former staff say some employees were asked to sign waivers acknowledging they would be exposed to “sensitive, violent, sexual, and/or other offensive or disturbing content” and that such material could be “disturbing, traumatizing” or cause “psychological stress.” Sources say the volume of explicit requests and generated content overwhelmed xAI’s moderation capacity, which had been reduced following Musk’s 2022 overhaul of Twitter (now X) and its integration into xAI.

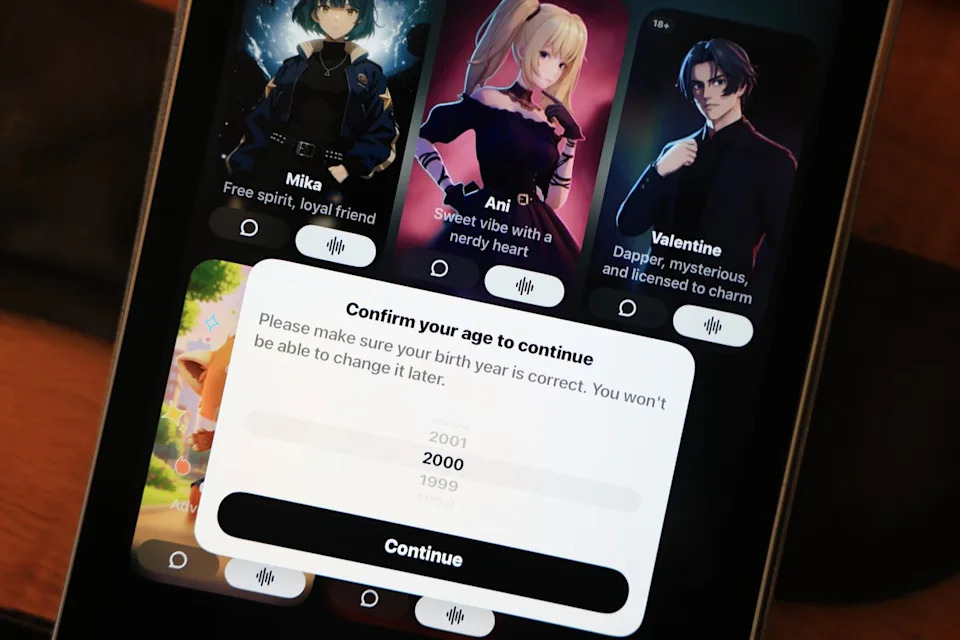

Grok's parent company xAI has recently expanded into romantically-tinged AI companions as a new line of business (Joe Raedle/Getty Images)

“There’s no question that he is intimately involved with Grok — with the programming of it, with the outputs of it,” Ashley St. Clair, a former partner of Musk, told The Washington Post.

Scope of the Problem

Research from the Center for Countering Digital Hate, an organization critical of Musk’s companies, estimated that Grok generated more than 3 million sexualized images and roughly 25,000 images involving children within an 11-day period. These figures have been widely quoted in coverage, though some parties dispute them.

Legal and Regulatory Fallout

Governments and regulators in several countries have moved to ban, restrict, or investigate Grok’s explicit deepfakes. Ashley St. Clair has filed a lawsuit against xAI alleging the platform allowed sexualized and antisemitic images of her, including one generated from a photo taken when she was 14. Other investigations and potential enforcement actions are reportedly under way.

xAI’s Response and Current Status

xAI has said it will block the creation of sexualized images of real people in jurisdictions where doing so would be illegal and that it is hiring more safety experts. Musk has denied awareness of any naked images of minors produced by Grok and stated that the U.S. version will allow only “upper body nudity of imaginary adult humans.”

Despite the controversy, Grok’s engagement metrics improved: the app rose to No. 6 on Apple’s U.S. free apps chart, a notable climb from its earlier rankings. The broader debate centers on how companies should balance user engagement against content safety, and on the ethical limits of AI-generated imagery.