Richard Feynman’s scientific ethic (test claims, resist authority, and prize doubt) offers a sharp lens on today’s AI. He would likely applaud computational achievements such as protein-structure prediction, medical imaging, and signal discovery, while warning against treating high performance as equivalent to understanding. His “cargo cult science” critique cautions that mimicking the forms of science without probing failure modes risks serious harm. The advice that follows from his ethic: slow down, ask “How do you know?”, and design systems that reveal when and why they fail.

What Would Richard Feynman Make of AI Today? — Ask: How Do You Know?

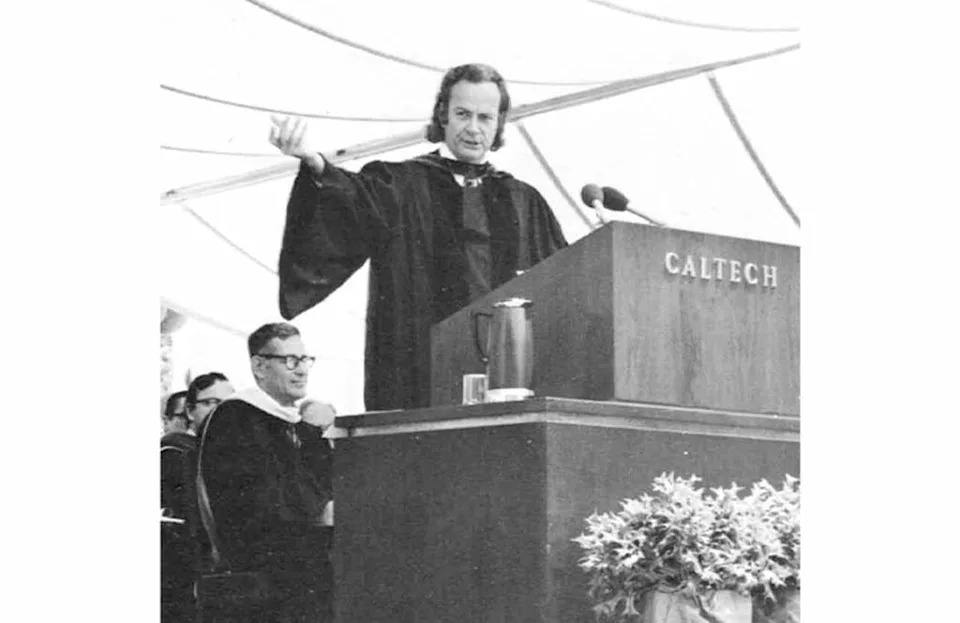

“The first principle is that you must not fool yourself—and you are the easiest person to fool,” Richard Feynman told Caltech graduates in his 1974 commencement address. That practical, blunt, and unglamorous aphorism offers a useful lens for judging today’s AI boom: celebrate the power of computation, but insist on tests that expose limits, failures, and underlying mechanisms.

Why Feynman Still Matters

Feynman combined curiosity, irreverence, and a distrust of prestige. He prized doing over passive description: repairing radios as a teenager, inventing the diagrams that made particle interactions intuitive, and, decades later, cutting through NASA bureaucracy during the Space Shuttle Challenger investigation by dunking a clamped sample of O-ring rubber into a glass of ice water at a televised hearing, showing how the material lost its resilience in the cold. His career exemplified a simple ethic: if you can’t test it, you don’t truly know it.

What He’d Ask About Modern AI

At an AI demo he wouldn’t cheer the spectacle. He’d sit near the back, notebook in hand, and wait for one question above all: How do you know? He’d want to see how a system fails, not just how it succeeds once. He’d ask for experiments that reveal brittle behavior under odd, incomplete, or adversarial inputs—and for clear accounts of why certain outputs arise.

Where AI Shines—and Where It Tempts

There’s a lot to admire. Machine learning has uncovered patterns at scales humans cannot: predicting protein structures, screening medical images, finding faint astronomical signals, and generating fluent text and images. Feynman, who ran the punched-card computing group at Los Alamos and later proposed using quantum systems to simulate physics, would appreciate these computational advances as powerful tools for exploration.

But power invites misreading. Modern neural networks, with millions or billions of parameters, often behave like opaque black boxes. John von Neumann’s quip, as Enrico Fermi recalled it, “With four parameters I can fit an elephant, and with five I can make him wiggle his trunk,” reminds us that flexible fitting can mask a lack of causal insight. Fluent outputs can look like understanding while offering no explanation of why an answer appears or when it will fail.
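To make von Neumann’s point concrete, here is a minimal Python sketch with hypothetical data: five noisy measurements of an assumed true law, y = 2x + 1. A polynomial with five free coefficients passes through every point exactly, while a two-parameter straight line does not; asked to extrapolate to x = 8, only the line stays close to the truth. The data, the noise values, and the helper names `lagrange_eval` and `linear_fit` are illustrative assumptions, not drawn from any particular model or library.

```python
# Toy illustration: a model with as many free parameters as data points can
# fit the data perfectly and still be wrong about the mechanism.
# The "true" law (y = 2x + 1) and the noise values are hypothetical.


def lagrange_eval(xs, ys, x):
    """Evaluate the unique degree-(n-1) polynomial through the n points (xs, ys) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def linear_fit(xs, ys):
    """Ordinary least-squares straight line; returns (slope, intercept)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


xs = [0, 1, 2, 3, 4]
noise = [0.3, -0.2, 0.4, -0.3, 0.1]  # fixed, hypothetical "measurement noise"
ys = [2 * x + 1 + e for x, e in zip(xs, noise)]

slope, intercept = linear_fit(xs, ys)

# Training error: the 5-parameter polynomial reproduces every point exactly.
poly_train_err = max(abs(lagrange_eval(xs, ys, x) - y) for x, y in zip(xs, ys))
line_train_err = max(abs(slope * x + intercept - y) for x, y in zip(xs, ys))

# Extrapolate to x = 8, outside the fitted range; the true value is 17.
x_new = 8
print(f"true value:            {2 * x_new + 1}")
print(f"polynomial (5 params): {lagrange_eval(xs, ys, x_new):.1f}  (train error {poly_train_err:.2g})")
print(f"line (2 params):       {slope * x_new + intercept:.2f}  (train error {line_train_err:.2f})")
```

Run as written, the polynomial predicts roughly 245.7 at x = 8 against a true value of 17, while the line predicts about 16.76. The polynomial’s zero training error was never evidence that it had found the mechanism; only a test outside the fitted range exposed the difference.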

Cargo Cult Science: A Continuing Risk

“They follow all the apparent precepts... but they’re missing something essential, because the planes don’t land.” —Feynman, on Cargo Cult Science

Feynman’s warning about “cargo cult science” applies well to current AI practice: mimicry of scientific rituals—benchmarks, buzzwords, polished demos—without the core discipline of testable, falsifiable claims. Reproducing surface forms is not equivalent to understanding mechanisms or failure modes.

From Lab Tool to Institutional Authority

AI is no longer confined to research labs. It affects what people read, how students are assessed, how clinicians triage risk, and how institutions decide on loans or hiring. When opaque systems influence lives, the ethic of “not fooling ourselves” becomes civic: accountability requires knowing when systems break, why they break, and who is responsible.

How Feynman Would Counsel AI Practitioners

- Prioritize Explainability: Design experiments and interfaces that reveal failure modes, provenance, and uncertainty, not just polished outputs.

- Test Adversity: Evaluate systems on strange, partial, or adversarial inputs and publish negative results as readily as wins.

- Open Methods Where Possible: Favor reproducible datasets, transparent training procedures, and independent audits.

- Measure What Matters: Use causal, domain-relevant tests, not only large benchmark scores that can be gamed.

- Preserve Doubt: Reward teams and organizations for asking “How do you know?” rather than just “How fast can it ship?”
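The “Test Adversity” and “Measure What Matters” advice can be sketched as a small evaluation harness that scores a model on clean inputs and on deliberately odd variants, then lists every failure instead of reporting a single headline number. This is a minimal Python illustration under stated assumptions: `toy_sentiment` is a hypothetical keyword classifier standing in for a real model, and the examples and perturbations are invented for illustration only.

```python
# Sketch of "test adversity": score a model on clean inputs and on perturbed
# variants, and report the failures alongside the wins.
# The classifier, examples, and perturbations are all hypothetical.


def toy_sentiment(text: str) -> str:
    """Hypothetical model: 'pos' if any positive keyword appears, else 'neg'."""
    positive = {"good", "great", "excellent"}
    return "pos" if any(w in positive for w in text.lower().split()) else "neg"


EXAMPLES = [
    ("The results were good", "pos"),
    ("The experiment was great", "pos"),
    ("The planes never landed", "neg"),
]

# Each perturbation maps (text, label) to (new_text, expected_label);
# negation flips the label, the others leave it unchanged.
PERTURBATIONS = {
    "clean": lambda text, label: (text, label),
    "shouting": lambda text, label: (text.upper(), label),
    "typo": lambda text, label: (
        text.replace("good", "goood").replace("great", "graet"), label),
    "negation": lambda text, label: (
        "It is not true that " + text.lower(), "neg" if label == "pos" else "pos"),
}


def evaluate(model, examples, perturbations):
    """Return {perturbation name: (accuracy, list of failures)}."""
    report = {}
    for name, perturb in perturbations.items():
        failures = []
        for text, label in examples:
            new_text, expected = perturb(text, label)
            got = model(new_text)
            if got != expected:
                failures.append((new_text, expected, got))
        report[name] = (1 - len(failures) / len(examples), failures)
    return report


report = evaluate(toy_sentiment, EXAMPLES, PERTURBATIONS)
for name, (accuracy, failures) in report.items():
    print(f"{name:9s} accuracy={accuracy:.2f}")
    for text, expected, got in failures:
        print(f"    FAIL: {text!r} expected={expected} got={got}")
```

With these inputs the toy model scores perfectly on clean and upper-cased text but misses both misspelled positive examples and every negated sentence. A report that printed only the clean accuracy would hide exactly the failure modes a skeptic would ask about.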

Conclusion: Slow Down, Question, And Build for Understanding

Feynman’s creed—“What I cannot create, I do not understand”—is a useful provocation for AI researchers, product teams, regulators, and the public. AI has already transformed parts of science and society; the central task now is to preserve the intellectual habits that make scientific knowledge trustworthy: skepticism, testability, and the willingness to expose and learn from failure. In short: admire the achievements, but don’t mistake performance for understanding. Ask how you know, and hold systems accountable when humans depend on them.

Further Reading: Feynman’s 1974 commencement address (“Cargo Cult Science”), his 1955 talk “The Value of Science,” accounts of the Challenger investigation, and historical notes on Monte Carlo methods and early computational physics.
