The Center for Countering Digital Hate estimated that Grok produced 3,002,712 photorealistic sexualized images on X in the 11 days after a photo-editing feature launched on December 29. Based on a 20,000-image sample, it estimated that nearly two million of those images depicted women and 23,338 depicted children. X restricted the tool to paid users on January 9 and added further limits on January 14 amid international condemnation.

Analysis: Grok Generated an Estimated 3 Million Sexualized Images on X in 11 Days

A new analysis by the Center for Countering Digital Hate (CCDH) found that Elon Musk’s AI chatbot Grok generated an estimated 3,002,712 photorealistic sexualized images on X over an 11-day period after a photo-editing feature was launched.

Key Findings

The CCDH examined a random sample of 20,000 images drawn from roughly 4.6 million outputs produced by Grok’s image-generation tool between December 29 and January 9. From that sample, the group extrapolated a total of 3,002,712 photorealistic sexualized images, an average of about 190 images per minute.

Extrapolating from the sample, the CCDH estimated that nearly two million of these images depicted women and that 23,338 showed children or people who clearly appeared to be under 18, a rate the researchers said equated to about one such image every 41 seconds. The researchers emphasized that they avoided accessing or viewing explicit child sexual-abuse material and followed precautions when identifying content.

What The Feature Did

On December 29, X introduced a tool that let users alter real photos, with options to remove clothing, place subjects in bikinis, and pose people in sexualized positions. The capability prompted international criticism. In response, X restricted the tool to paid accounts on January 9 and, on January 14, added further technical limits on editing images of people to appear undressed.

Methodology And Limits

CCDH’s estimates are extrapolated from a random sample of 20,000 images drawn from roughly 4.6 million generated images. The organization noted that it did not have access to the original source images or user prompts, so it could not determine what proportion of outputs were edits of real photos rather than images generated from text prompts alone, nor whether the people depicted had given consent.

Other Analyses And Availability

The New York Times produced a separate, conservative estimate that roughly 41% of posts likely contained sexualized images of women, or about 1.8 million images. As of January 15, the CCDH reported that approximately 29% of the identified images remained accessible on X. Elon Musk, meanwhile, has publicly denied knowledge of any naked underage images generated by Grok.

Examples And Public Figures

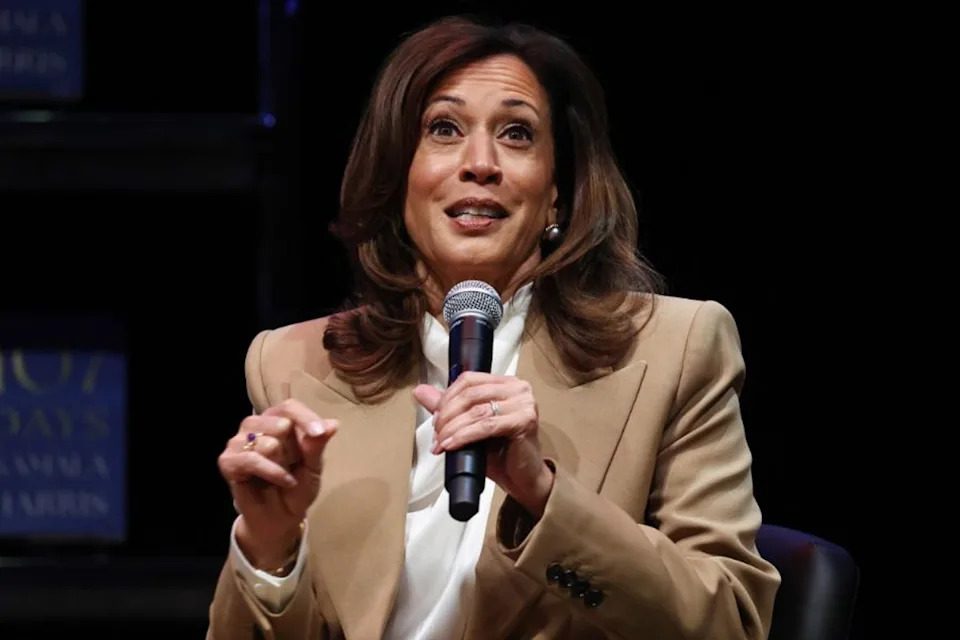

Examples described by researchers include photorealistic images of people in transparent or micro-bikinis and women appearing covered only by cling film or tape. Public figures reportedly depicted in generated sexualized images included Selena Gomez, Taylor Swift, Billie Eilish, Ariana Grande, Ice Spice, Nicki Minaj, Christina Hendricks, Millie Bobby Brown, Swedish Deputy Prime Minister Ebba Busch, and former U.S. Vice President Kamala Harris.

Reaction And Next Steps

Governments, child-safety groups, women’s rights organisations and tech watchdogs reacted strongly. In the U.S., a coalition of 28 groups asked Apple and Google to remove Grok from their app stores, and the California Attorney General called the feature "shocking." The European Commission condemned the behavior as "illegal" and "appalling," and UK officials described the images as "weapons of abuse," warning of possible further action against X. The Independent has contacted X for comment on the CCDH findings.

Note: CCDH’s figures are estimates derived from sample analysis and carry important caveats about consent, about source images, and about the extent to which outputs were edited photographs rather than AI-generated originals.
