Public fear about AI is high, but its quieter scientific applications could be transformative. Evidence suggests research productivity is falling and new work is becoming less disruptive, creating long-term headwinds to growth. AI tools, from AlphaFold’s protein structures to DeepMind’s GNoME and GraphCast and experimental systems like Coscientist and FutureHouse’s Robin, can amplify researchers by reading the literature, planning and even running experiments. If governed responsibly, AI-for-science could lower costs in health and energy and revive innovation, though it also raises risks such as errors and dual use.

Running Out of Good Ideas? How AI Could Become Science’s Secret Weapon

Public anxiety about artificial intelligence is high — and for understandable reasons. Surveys show many people worry more about AI than they’re excited by it, and high-profile debates often focus on job loss, misinformation, privacy and dystopian futures. But those headline-grabbing risks tell only part of the story. There is a quieter, potentially transformative set of AI applications that could directly help solve some of the biggest bottlenecks to innovation: scientific research.

Why New Ideas Are Getting Harder

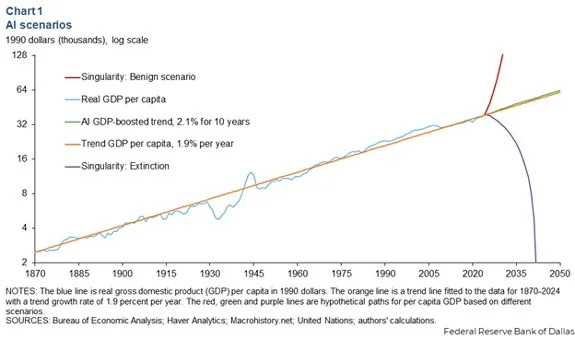

There is mounting evidence that generating breakthrough ideas is becoming more difficult. In the influential paper “Are Ideas Getting Harder to Find?,” economist Nicholas Bloom and colleagues show that sustaining historical rates of productivity growth now requires vastly more researchers and R&D spending. Research productivity, in other words, is falling, so we have to row harder just to stay in place. In semiconductors, for example, the number of researchers needed to keep doubling chip density every two years is now more than 18 times what it was in the early 1970s. A 2023 Nature analysis of 45 million papers and nearly 4 million patents found that research today is, on average, less disruptive than in prior decades.

Demographic trends add another headwind: low fertility in wealthy countries and a likely global population plateau could reduce the total number of people producing new ideas. Restrictive immigration policies compound the problem by shrinking the pool of researchers a country can draw on, just when talent mobility matters most.

Information Overload — And a Role for AI

Ironically, one of science’s biggest bottlenecks is abundance: researchers are drowning in data and literature and lack the time to read, synthesize and test every promising lead. That kind of repetitive, data-intensive work is precisely what modern AI systems are built to do, which is why many scientists now talk about “AI as a co‑scientist.”

Concrete Wins: Proteins, Materials and Weather

AlphaFold. DeepMind’s AlphaFold predicts a protein’s 3D structure from its amino-acid sequence, a task that once took months or years of painstaking lab work per protein. AlphaFold’s predictions, now freely available in public databases, are helping speed up the design of drugs, vaccines and enzymes. Demis Hassabis and John Jumper of DeepMind shared half of the 2024 Nobel Prize in Chemistry for this protein structure prediction work; the other half went to computational biologist David Baker for computational protein design.

GNoME and Materials Discovery. In 2023 DeepMind introduced GNoME, a graph neural network trained on crystal data that proposed roughly 2.2 million new inorganic crystal structures and flagged about 380,000 as likely stable, compared with roughly 48,000 stable inorganic crystals previously identified. DeepMind described this as hundreds of years’ worth of discovery compressed into a single computational effort. The candidates broaden the search for cheaper batteries, more efficient solar cells, better semiconductors and stronger construction materials, though each one still has to be synthesized and tested in a lab.

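GraphCast and Weather Forecasting. DeepMind’s GraphCast, trained on decades of historical atmospheric data, can produce a global 10‑day forecast in under a minute. In DeepMind’s published evaluation, it outperformed the European Centre for Medium-Range Weather Forecasts’ HRES system, a leading conventional model, on about 90% of the verification targets tested. Better forecasts can improve disaster preparedness, agricultural planning and everyday weather services.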

From Analysis to Experimentation

AI is not only helping researchers read and reason faster — it can also plan and run experiments. Carnegie Mellon researchers described Coscientist in a 2023 Nature paper: a large-language-model–based “lab partner” that reads hardware documentation, plans multistep chemistry experiments, writes control code and coordinates instruments in an automated lab. Coscientist orchestrates robots that mix chemicals and collect data, an early step toward so-called self‑driving labs.

Nonprofits and startups are also building broader research assistants. FutureHouse, a nonprofit backed by Eric Schmidt, has launched specialized agents (Crow for general Q&A, Falcon for deep literature reviews, Owl for checking whether an idea has already been explored, and Phoenix for chemistry workflows) and has combined agents like these into Robin, a multi-agent system meant to automate much of the discovery process. In its first case study, Robin proposed ripasudil, an existing glaucoma drug, as a repurposing candidate for dry age‑related macular degeneration and designed follow-up experiments that human researchers then ran in the lab.

What This Could Mean — And The Risks

When AI systems handle literature review, search, hypothesis generation and parts of experimental design, each human researcher effectively becomes more productive. That amplification could revive innovation even if hiring more researchers becomes harder: cheaper drug discovery, new energy materials, improved climate models and better forecasting are all plausible outcomes that would lower costs and improve resilience.

But these gains come with important caveats. Language models can confidently misstate or overgeneralize scientific findings; recent evaluations have found that they sometimes summarize papers inaccurately or overstate their conclusions. Dual‑use risks are real: tools that speed vaccine design could also be misapplied to harmful biological or chemical research. Wiring AI to lab equipment without strict safeguards could speed up dangerous experiments as well as beneficial ones, faster than humans can audit them.

Policy and the Middle Path

The public’s caution about AI is warranted, but the political response should be nuanced. Rather than banning AI wholesale or treating it as a techno‑panacea, policy should promote responsible deployment — directing AI resources toward scientific priorities that move the needle on health, energy, climate resilience and public goods, while investing in robust oversight, safety standards and access controls to mitigate misuse.

Bottom line: AI won’t magically replace human creativity, but used responsibly it can act as invisible infrastructure — multiplying researchers’ effectiveness, accelerating discovery, and helping restore momentum in innovation where it’s needed most.

This reporting was supported by a grant from Arnold Ventures. Vox had full editorial discretion.